This paper provides a précis of my current research project, which aims to articulate a media-philosophical rationale for the generalised function of scale within computational culture. Broadly, I identify scale as the simultaneous question and solution thrown up by what I call the ‘transsystematic’: the constitutive impasses of systematicity that become evident with the formalisation and generalisation of computation as a media technology. Scale indexes, and provides a way through, the impasses and contradictions of qualitative and quantitative difference, analog and digital, totality and multiplicity, necessity and contingency, structure and play that are thrown into indelible relief by computation. Yet scale itself remains a slippery concept, endlessly evoked yet poorly situated in our epistemic frameworks. Engaging with scale as a fundamental media-philosophical concept may therefore shed light on ways beyond some of the crucial theoretical impasses of computational culture.

Keywords: Scale, Systematicity, Computation, Contingency, Technoculture, Media Philosophy.

Scale has come to occupy a central place within computational culture. Variously invoked as a value, problem, method, and even a kind of theodicy,¹ scale is a ubiquitous figure within contemporary discussions of technology and, more broadly, the systems that organise life on this planet.² My research seeks to articulate a media-philosophical account of why and how scale is central to contemporary technoculture in these ways: what underpinning function does scale perform to necessitate its ubiquity? The essential claim I argue for here is that scale and scaling techniques have become a transcendental condition for how the impasses and limits of systematicity are mediated.

Breaking the argument down very schematically, it proceeds like this:

Scale, in one form or another, has become a ubiquitous concept, either implicitly or explicitly, across nearly every discipline of systematic inquiry today, from the sciences to the humanities.

While there is an enormous amount of work that describes various instances of scale and scaling, and an emerging literature in media theory and philosophy focussing on more rigorously defining scale as a general concept, there has yet to be a strong account of why scale becomes so central and pervasive in computational culture.

To this end, I argue that scale has become so necessary because it is simultaneously the problem and the solution thrown up by a generalised crisis of systematicity that emerges alongside the ascendancy of computation as a paradigmatic media technology. Scale lets us be systematic without requiring recourse to a total or complete systematicity.

To clarify the concept, I take a ‘scale’ to be any situation where some kind of zone or region of relative commensurability exists or has been constructed. To identify a scale is to posit some particular formal (or informal) set of constraints, or a region where things exist together in some kind of comparable, systematic way. So, we can identify, for instance, the ‘human scale’, where a set of perceptual, cognitive, spatial, and temporal categories and modes generally hold. Or we might identify a scale of measurement, for which certain variables, certain dimensions of reality, become legible and composable: a chemical scale, for instance, which can be both very small and very large, such as the scale of atmospheric CO2, which entails a set of dynamic properties. Thus, scale isn’t just a matter of smaller or larger. It’s not just a question of space and time in linear terms, but a dimensional question of what aspects of reality are available to relation within certain bounds.

Zachary Horton offers a useful definition of scale that synthesises many of these concerns: given a system with at least two different poles or modalities – which is to say a system where there’s some kind of difference and thus relation – “scale emerges as the limited set of features that such a system can differentiate”.³ In this sense, then, scale is a fundamental aspect of how we do basically any kind of mediation: before we can pick out what things to measure in an experiment, before we can identify what things to pay attention to in a scene, before we can think adequately about what categories we might need to make of something, we need to have implicitly or explicitly identified a scale within which we can work. There must be a frame, a ground, or a situation in which such pragmatics can be worked through.

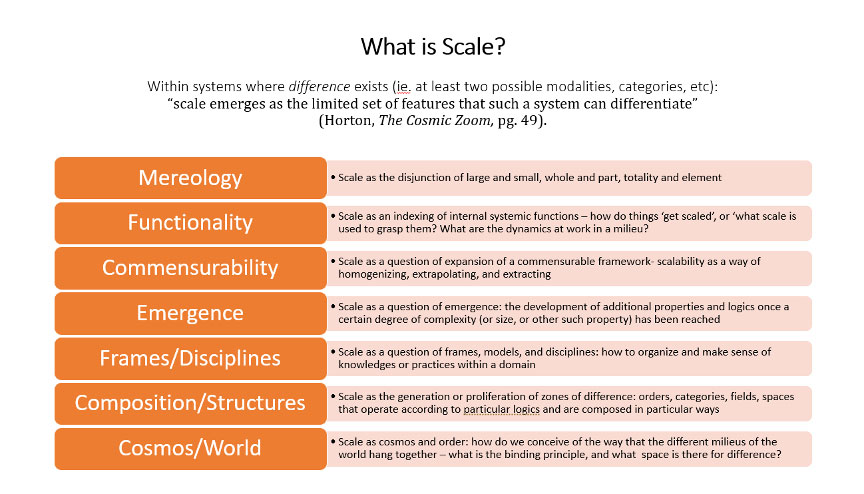

Fig. 1. Table of conceptual functions that scale performs

So, where does scale occur? Now, given the definition I’ve just outlined, you could very well say ‘basically everywhere’. But today I’ll highlight two main phenomena that have made scale of particular interest to scholars in recent years.

The first is climate. As Dipesh Chakrabarty writes, quite neatly, “The Anthropocene, one might say, is all about scale.”⁴ It bridges multiple size scales, from molecules to biomes to the atmosphere; it relies on multiple scales of scientific practice, from biochemical interactions to planetary fluid dynamics; it involves the suturing of phenomenological scales, from personal experience of local weather patterns to the collective, distributed experience of ongoing crises; it entails multiple political and organisational scales, from individual consumption habits to national environmental protection regulations to international agreements, regional intergovernmental ‘blocs’, and global market trends; even the name itself is a spatio-temporal ‘scaling’ of phenomena, carving an epoch out of geological timescales. The Anthropocene concept, then, serves to indicate both the crossing of a threshold of size and scope, a transitive spatio-temporal effect, and the imbrication of many distinct fields and forces in producing this outcome.

Crucially, this lets us see the central scalar problem. On the one hand, addressing climate catastrophe involves trying to perform a kind of scalar collapse: to articulate a global problem, a shared response, a planetary system in crisis across all scales. On the other hand, much of what this actually entails is careful navigation of, and attention to, the specificities of particular scales within these frames, and the ways that scalar difference is decisive in actually making things happen.

Now, while climate drives a lot of interest in scale, I’m going to focus today on another area of inquiry, which I contend is more epistemologically central to the question of scale, and that’s computation. Where climate functions as the material imposition of scalar phenomena, computation is the mediatic condition by which scale at present occurs and is processed, organised, and known. Crucially, it offers us a window into the central reason for scale’s significance today: the crisis of systematicity.

First, we can note that scale is an omnipresent issue for computational aesthetics: computational processes occur at spatial and temporal scales far smaller and faster than human perception. This necessitates that we come up with various aesthetic schemas of representation to make these processes legible at our scale. Additionally, computational processes rely on the construction and segmentation of various scales themselves, from the originary regularisation of states of change in the transistor,⁵ to its representation in machine code, to higher-level programming languages and interfaces.⁶ As Wendy Chun argues, while there might be a formal or logical equivalence between layers in software stacks, “one cannot run source code: it must be compiled or interpreted. This compilation or interpretation – this making executable of code – is not a trivial action”⁷ – it entails labour and energy, and frequently involves error, incommensurability, and disjuncture. Computation raises scalar problems in its representation, but also in its very functionality, its very process. We need the trans-scalar functions of computation as much as we need to recognise and work with its radical scalar separations and disjunctures. So, on the one hand, you have different scales that operate according to different terms and have different phenomena that are legible within them. But on the other hand, computation is so often about leveraging these very differences in scale in order to cut across them, to find solutions that bridge the differences between the world and its representations. One of the main things we want from computation is to scale transitively, to produce a way of cutting across scales or scaling up: we want scalability.

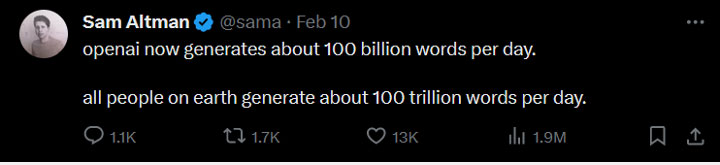

A common example of this is the ideological and technical orientation towards scale in the big computing firms. As Alex Hanna and Tina M. Park write, scalability as a form of growth “has become the default and predominant approach within the current technology sector.”8 The kind of rhetoric that someone like Sam Altman engages in is pretty typical of this, when he says that Open AI “generates about 100 billion words per day”, a figure he juxtaposes with the “100 trillion words per day” generated by “all people on earth”9.

Fig. 2. Tweet / ‘X’ Post by Sam Altman, CEO of Open AI, February 10, 2024

The obvious implication here is fundamentally scalar: the significance of OpenAI’s work is to be evaluated in terms of (1) its upward trajectory along what is figured as a smooth scale of quantised production (words per day), and (2) its approaching (though without yet achieving) a new scale of operation, a qualitatively significant order of magnitude that can be compared not unfavourably with the total linguistic output of humanity. OpenAI’s generative models, Altman implies, approach linguistic profundity at least in principle, by virtue of sheer scale, understood in both of these registers: as a quantitative scaling, and as a qualitative shift in the order of scale. In this approach to scale, it’s believed that order and structure can be approximated and unspooled through (1) decomposing any gestalt ‘scale’ (language, art, rationality) into a fundamental scale of basic, quantised, and manipulable units (i.e. data points, parameters, nodes), and (2) iterating the manipulation of these units on a sufficiently vast scale so as to reveal transitive relationships, structures, or tendencies.

Now, I would add that this dual paradigm of scale has importance beyond tech-culture ideology, or even a general ideology of capitalist modernity. I argue it indexes a central tenet at the heart of computational culture: that scale – in some form, pursued for its own sake – will solve the problems of qualitative incommensurability and axiomatic inconsistency that plague systematicity itself. The gambit pursued by so much AI R&D today of adding more scale – in terms of data, parameters, operational layers, compute, etc. – with the aim of rendering computable hitherto incomputable systems (i.e. language, intuitive reasoning, art) partakes in this general logic, which I argue underpins and conditions most of computational culture today.

So, what is this central problem that underpins the functions of scale today? What is the problem that scale addresses through its navigation of this fundamental antinomy between transitive scalability and incommensurable scalar difference?

Fundamentally, it’s what I call the transsystematic: the generalised crisis of systematicity that is coterminous with the computational culture that emerges out of the 20th century. This is the broad-based realisation that any given systematisation (as robust, individuated coherence, especially formal coherence) eventually confronts its own condition of operation as non-systematic excess, outside, inconsistency, or indeterminacy. Crucially, this constitutive failure is part of how it works. To break it down schematically, the idea of the transsystematic indexes situations where systematicity runs up against its own limits, but functions through and across those limits nevertheless. It thus entails a few basic premises:

Systematicity that is simultaneously complete, closed, and consistent is impossible – such attempts always entail problems of recursion, exclusion, or immanent contradiction.

Systems function not only despite this impossibility of an ‘absolute’ systematicity, but via and because of it.

Contingency, the indeterminable, etc., therefore preclude any single, completely totalising system, but also necessitate that a plurality of such systems (mutually incompatible localities that each possess a certain structural coherence) exist simultaneously and interact.

This, I claim, is a generalised consequence of much of 20th-century thought across the natural sciences, mathematics, and humanities. While its roots could be traced earlier, I follow AA Cavia in situating the decisive moment at which the transsystematic becomes undeniable in the failure of Hilbert’s program and the triadic formalisations of computing by Gödel, Turing, and Church,¹⁰ where mathematics and logic are shown to be premised on an irreducible and constitutive contingency and inconsistency. This crisis of systematicity penetrates nearly every field of systematic inquiry and organised practice in the 20th century, from mathematics, biology, and engineering, to politics, literary theory, and philosophy. For example, we could point to the shift away from strictly formalised computational systems in GOFAI towards open-ended, scale-based processes in contemporary machine learning; we might also note the vociferous debates that geography has had, since the 1980s, about the ontological nature of scale;¹¹ in the humanities more broadly, this narrative is easily traceable in the systematising ambitions of structuralism, and their critique and destabilisation in the post-structural turn. In every case, attempts at systematising knowledge towards an absolute ground of completeness and consistency founder upon the very conditions of this systematicity. Impasses emerge between the irreducibility of qualitative differences and their decomposition into simpler components, as well as in the recursion of basic units of meaning upon themselves.

But the crucial thing here is that these impasses at the heart of systematisation do not result in the complete failure of systematicity: for nearly all pragmatic purposes, these systems continue to function adequately. I therefore call these impasses, these problems, ‘transsystematic’ – they are situations where systematicity runs up against its own limits, but functions through and across those limits nevertheless. And scale, I argue, is the central means by which this occurs. Rather than trying to produce a complete and total system, one where all possible terms and categories are reconciled within a determinate set of relations and methods, increasingly we turn to crafting local scales, specific scales where we can make things systematic; through constructing a plurality of these scales, we can then – in a heuristic, fuzzy, and indeterminate way – begin to relate these scales to each other.

When seeking examples to illustrate this point, it can be tempting to point to highly complex cases, such as climate modelling or various features of quantum interactions. However, an example from philosopher of science Mark Wilson is much more instructive. Wilson points to the relatively straightforward case of an engineer trying to model a steel beam in a railway bridge.¹² How is that single beam going to react to a train running over it? Even in this very simple case, it’s impossible to model the interactions of the beam with one homogeneous scale: your computational model needs to be multi-scalar. It needs to have separate and incommensurable sets of mathematical processes for the molecular lattice, the grain structure, and the overall hardness and elasticity of the beam.¹³ Each of these scales entails different behaviours that only emerge at that scale, and these cannot be determinately modelled in relation to each other. Instead, you have to model the scales separately, and then – heuristically and indeterminately – run the models up against each other in order to get them to work out a general approximation of the gestalt system. Scale offers us a method to reconcile irreducible systematic differences, while preserving those differences as different.

So, in this sense, we can say that scale emerges in response to the crisis of systematicity because scale lets us provisionally navigate transsystematic problems: in the absence of a complete or total systematicity, but needing to be systematic nevertheless, disciplines increasingly turn to how we can construct localities, and mediate them as generalities. Scale is revealed to be central to the epistemology of computational culture because it is the central concept that lets us relate across its fundamental antinomy, of the digital and the analog, the systematic and the inconsistent, the structured and the open.

The implication here is that we cannot treat scale as some catch-all solution, a transitive, smooth scaling as in the Californian ideology of scalability. But neither can we follow Bruno Latour¹⁴ in treating scale as something that we should throw out, saying “connectivity, yes; scale, no”¹⁵ and attending instead simply to assemblages of relations as relations. We cannot reject scale as a condition that would be operative prior to the relational assemblage of actors into networks, as this ensures that scale can never be meaningfully articulated on its own terms, as a systematic condition. Instead, I argue we need to consider scale in this transsystematic guise: as a fundamental condition of how systems come to be, and how we can navigate their indeterminacy and incommensurability. As Bernard Stiegler puts it: “the question of system… must be redefined… in terms of a realism of relations and an analysis of processes of individuation that are woven as relations of scale and orders of magnitude”.¹⁶ By treating scale in this way, as a fundamental and constitutive condition of systematicity, we can begin to more adequately account for the work it performs in the world.

Cavia, AA. Logiciel: Six Seminars on Computational Reason. Berlin: &&& Publishing, 2022.

Chakrabarty, Dipesh. “Afterword: On Scale and Deep History in the Anthropocene.” In Narratives of Scale in the Anthropocene: Imagining Human Responsibility in an Age of Scalar Complexity, 226–32, 2021.

Chun, Wendy Hui Kyong. Programmed Visions: Software and Memory. London: The MIT Press, 2011.

Coen, Deborah. Climate in Motion: Science, Empire, and the Problem of Scale. University of Chicago Press, 2018. https://doi.org/10.3197/096734019x15755402985677.

Dürbeck, Gabriele, and Philip Hüpkes, eds. Narratives of Scale in the Anthropocene: Imagining Human Responsibility in an Age of Scalar Complexity. Narratives of Scale in the Anthropocene. New York and London: Routledge, 2021. https://doi.org/10.4324/9781003136989.

Gauthier, David. “Machine Language and the Legibility of the Zwischen.” In Legibility in the Age of Signs and Machines, edited by Pepita Hesselberth, Janna Houwen, Esther Peeren, and Ruby de Vos, 147–65. Brill, 2018.

Hanna, Alex, and Tina M Park. “Against Scale: Provocations and Resistances to Scale Thinking.” In CSCW Workshop ’20. Virtual: Association for Computing Machinery, 2020.

Hecht, Gabrielle. “Interscalar Vehicles for an African Anthropocene - On Waste, Temporality, and Violence.” Cultural Anthropology 33, no. 1 (2018): 109–41. https://doi.org/10.14506/ca33.1.05.

Herod, Andrew. Scale. New York: Routledge, 2011.

Horton, Zachary. The Cosmic Zoom: Scale, Knowledge, and Mediation. Chicago and London: University of Chicago Press, 2021.

Latour, Bruno. “Anti-Zoom.” In Scale in Literature and Culture, edited by Michael Tavel Clarke and David Wittenberg, 93–101. Palgrave Macmillan, 2017.

MacAskill, William. What We Owe the Future. New York: Basic Books, 2022.

Altman, Sam. “OpenAI Now Generates about 100 Billion Words per Day.” Twitter / X, February 10, 2024.

Stiegler, Bernard. “Foreword.” In On the Existence of Digital Objects, translated by Daniel Ross, vii–xiii. Minneapolis: University of Minnesota Press, 2016.

Wilson, Mark. Physics Avoidance: Essays in Conceptual Strategy. Oxford: Oxford University Press, 2017.

1. William MacAskill, What We Owe the Future (New York: Basic Books, 2022).

2. See, for instance: Gabriele Dürbeck and Philip Hüpkes, eds., Narratives of Scale in the Anthropocene: Imagining Human Responsibility in an Age of Scalar Complexity, Narratives of Scale in the Anthropocene (New York and London: Routledge, 2021), https://doi.org/10.4324/9781003136989; Zachary Horton, The Cosmic Zoom: Scale, Knowledge, and Mediation (Chicago and London: University of Chicago Press, 2021); Gabrielle Hecht, “Interscalar Vehicles for an African Anthropocene - On Waste, Temporality, and Violence,” Cultural Anthropology 33, no. 1 (2018): 109–41, https://doi.org/10.14506/ca33.1.05; Deborah Coen, Climate in Motion: Science, Empire, and the Problem of Scale (University of Chicago Press, 2018), https://doi.org/10.3197/096734019x15755402985677.

3. Horton, The Cosmic Zoom: Scale, Knowledge, and Mediation, 49.

4. Dipesh Chakrabarty, “Afterword: On Scale and Deep History in the Anthropocene,” in Narratives of Scale in the Anthropocene: Imagining Human Responsibility in an Age of Scalar Complexity, 2021, 226.

5. David Gauthier, “Machine Language and the Legibility of the Zwischen,” in Legibility in the Age of Signs and Machines, ed. Pepita Hesselberth et al. (Brill, 2018), 147–65.

6. Wendy Hui Kyong Chun, Programmed Visions: Software and Memory (London: The MIT Press, 2011).

7. Chun, 23.

8. Alex Hanna and Tina M Park, “Against Scale: Provocations and Resistances to Scale Thinking,” in CSCW Workshop ’20 (Virtual: Association for Computing Machinery, 2020), 1.

9. Sam Altman, “OpenAI Now Generates about 100 Billion Words per Day,” Twitter / X, February 10, 2024.

10. AA Cavia, Logiciel: Six Seminars on Computational Reason (Berlin: &&& Publishing, 2022), 4–5.

11. Andrew Herod, Scale (New York: Routledge, 2011).

12. Mark Wilson, Physics Avoidance: Essays in Conceptual Strategy (Oxford: Oxford University Press, 2017), 201–12.

13. Wilson, 201–12.

14. Bruno Latour, “Anti-Zoom,” in Scale in Literature and Culture, ed. Michael Tavel Clarke and David Wittenberg (Palgrave Macmillan, 2017), 93–101.

15. Latour, 101.

16. Bernard Stiegler, “Foreword,” in On the Existence of Digital Objects, trans. Daniel Ross (Minneapolis: University of Minnesota Press, 2016), viii.